Indicator Guidance¶

The structure of this section of the handbook parallels the structure of the indicator’s various fields in the survey tool. Here researchers will find guidance for common concerns related to these various components.

The primary indicators in the Global Data Barometer are framed as “to what extent” questions. Indicators are made up of a number of discrete sub-questions, which together generate weighted scores on a 10-point scale. In the pilot edition, these scores are not displayed to researchers, to avoid any potential bias while collecting data.

Typically, indicator sub-questions are organized in three sections: existence, elements, and extent, and each indicator has a research journal and justification and sources section.

Indicator Status and Confidence¶

Status¶

In the survey tool, at the top of each indicator is a space to indicate the status of your research. Use this to keep track of your work. Researchers can mark an indicator as “Not started,” “Draft,” or “Complete.” Reviewers can mark content as “Reviewed.” The research coordinator can view the completion status of indicators in their management dashboard.

Confidence¶

For each indicator, researchers can provide a personal assessment of their confidence in the answers they have provided. This is a useful way for researchers to indicate to reviewers whether they believe their answers to be highly accurate or if they have concerns about answers’ accuracy—for example, when researchers have not been able to confirm answers in the time allotted. Use the indicator’s research journal to briefly record any reasons for a lower confidence in the accuracy of the answer.

In some cases, researchers may also have concerns about the reliability of the data published by governments. This can be noted in the research journal or justification box. The confidence field is only for researchers to assess their confidence in the accuracy of their own answers.

Existence Sub-questions¶

Existence sub-questions ask researchers to determine whether relevant frameworks, institutions, training programs, datasets, or use cases exist. For each indicator, as part of the indicator-specific guidance, the handbook contains a “Definitions and Identification” section. This describes the governance arrangements, capabilities, types of data, or use cases the researcher should look for.

The researcher must check carefully whether anything meeting this definition exists in the country—and if so, locate the best possible examples to assess. As appropriate, the existence sub-question will then ask researchers to assess the nature of the example(s) they have identified.

When there is no evidence¶

If the researcher determines that there are no examples that correspond to an indicator—or if they cannot locate evidence of such existence—then they must explain in the research journal the research steps they took to come to this conclusion.

Additionally, researchers must provide in the justification box a short explanation and its source (if available), as well as any relevant context regarding this absence. Such context might include, for example, related evidence that did not fully match the requirements, evidence that was only available for times outside of the period defined in the study, etc.

For example...

Justification: A data protection law has been proposed a number of times by civil society, and introduced as a draft by opposition parties. However, it has no reasonable prospect of becoming law.

Source: Article describing history of campaign for data protection law in country.

In most cases, a “No” response to an existence question will mean the indicator’s other sub-questions do not need to be completed.

When information is not online¶

If the researcher finds evidence that information exists but is not available online (for example, because it is only accessible via an information request or by visiting the government agency in person), the researcher should select “information is not online.” Supporting questions will ask the researcher to explain and provide evidence about how the information can be accessed.

If the basic information that is available online is sufficient to answer any of the element or extent sub-questions, the researcher should try to do so, providing corresponding evidence. If the basic information isn’t sufficient to answer a given sub-question, the researcher should mark that sub-question “No” or the closest equivalent option, as appropriate.

The Barometer draws on desk research and interviews, with an explicit focus on evidence that is available online. To answer sub-questions a researcher may need to conduct interviews, but the researcher shouldn’t have to visit government agencies to examine datasets—other than online—or seek additional information through formal information requests.

Locating the best example¶

The Barometer uses a bright spots design, built to handle federal systems with flexibility. This means that, unless otherwise specified in the indicator guidance, researchers should look first for national examples (e.g., national law, nationally provided dataset) from the government. If no such example is identified, or the example appears weak (e.g. the answers to many other sub-questions are “No”), the researcher should then check for the presence of sub-national examples or, when relevant, those that only apply to particular agencies.

If a sub-national example is both stronger than the national example and representative of widespread practice across the country, it can be assessed instead of the national example. This must be explained clearly in the justification box. Otherwise, the researcher should assess the national example and note any isolated sub-national examples they have identified in the justification box.
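The order of preference described above can be sketched as a small decision function. This is only an illustration: the `Example` class, the `choose_example` function, and the use of a count of “Yes” answers as a measure of strength are assumptions made for the sketch, not part of the survey tool. Falling back to the strongest sub-national example when no national example exists is also an assumption.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Example:
    """A candidate example (law, dataset, program) found during research."""
    name: str
    level: str                # "national" or "sub-national"
    yes_answers: int          # hypothetical strength proxy: sub-questions answered "Yes"
    widespread: bool = False  # sub-national only: representative of practice across the country?


def choose_example(national: Optional[Example],
                   sub_national: List[Example]) -> Optional[Example]:
    """Return the example to assess, preferring national examples by default."""
    if national is None:
        # Assumption: with no national example, assess the strongest
        # sub-national example that was found (or nothing at all).
        return max(sub_national, key=lambda e: e.yes_answers, default=None)

    # A sub-national example may replace the national one only if it is
    # representative of widespread practice AND stronger.
    representative = [e for e in sub_national if e.widespread]
    best = max(representative, key=lambda e: e.yes_answers, default=None)
    if best is not None and best.yes_answers > national.yes_answers:
        return best
    return national
```

Whichever example the function returns, the reasoning still has to be written up in the justification box, including any isolated sub-national examples that were not assessed.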

Generally, comprehensiveness issues will be addressed in an indicator’s extent sub-questions, which focus on geographic, jurisdictional, or institutional coverage.

See the section on “Handling multiple or fragmentary evidence (elements)” for guidance on how to assess element sub-questions in cases where relevant data is distributed across several datasets, each of which may be of different quality.

Handling multiple or fragmentary evidence (existence)¶

Sometimes, answers to an indicator’s sub-questions will be spread out across multiple sources. For example, a relevant framework may involve multiple laws or policies, or relevant data may be organized across multiple datasets, perhaps even published by different agencies. This will vary by country. When you find this to be the case, to answer the existence question of such a framework, dataset, capacity-building program, etc., you must identify all of the relevant components in the overall justification box.

For related information, see also the section on “Handling multiple or fragmentary evidence (elements).”

Frameworks and the force of law¶

Existence sub-questions in core governance indicators ask whether relevant laws, regulations, policies, and/or guidance have the force of law. Researchers should exercise their expert judgement when making this determination. For example, compare these two cases:

- In country X, the law creates an agency to monitor political finance and regulations empower the agency to collect structured data. The agency establishes systems to collect and publish the data. Right-to-information laws ensure a right to request data from the agency. In this case, if the agency stopped collecting or publishing data on political finance without there having been changes in regulations or law, a legal challenge could be mounted.

- In country Y, the law creates a duty for political parties to disclose details of their financing, but does not specify a format or require public disclosure. The elections commission supplies an Excel template for parties to use, but does not have the power to mandate its use or to publish the submissions it receives. It starts publishing submissions on a voluntary basis, but some parties withhold consent for their submissions to be published. Here, there is a framework in place, but significant parts of it are voluntary and lack the force of law.

Note: In sub-questions we often abbreviate “relevant laws, regulations, policies, and guidance” as “framework.” This is because the basis for collecting and publishing data is often distributed across multiple laws, regulations, policies, and guidance documents. For example, one law may empower an agency to collect data, another regulation or memorandum may specify that data should be provided in a structured form, and another law may mandate that when data is provided, it should be under open license. “Framework” is used to represent the collection of relevant laws, regulations, policies, and guidance; it does not imply that a government itself necessarily presents or understands these as a unified framework.

Element Sub-questions¶

Once the relevant frameworks, institutions, training programs, datasets, or use cases have been identified, the element sub-questions invite researchers to carry out a detailed assessment.

In general, elements are written as statements. The researcher will need to examine documents, datasets, or other evidence closely to confirm whether the statement is true or false. In cases where the statement is only partially true, or the researcher has doubts about how far the statement applies, they may answer “Partially.” In most cases, elements have supplementary questions that ask the researcher to provide sources or justifications.

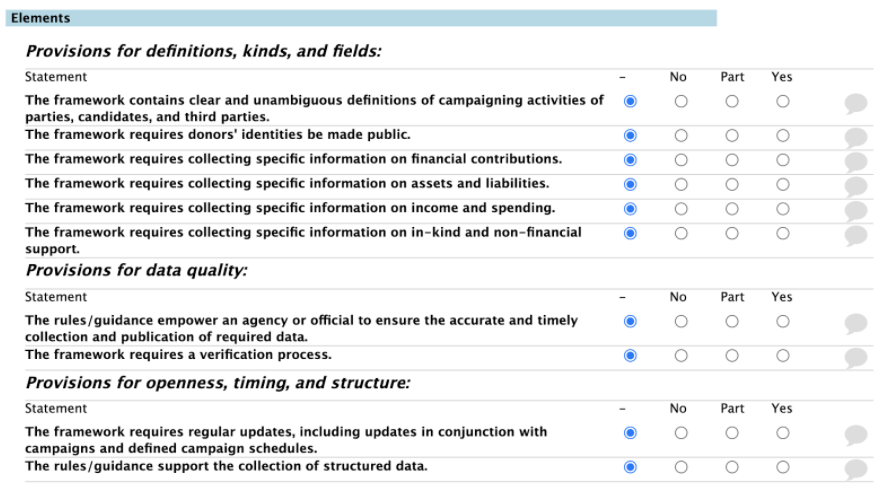

An example of an elements section is shown above. Each statement is on one line, accompanied by radio buttons for selecting “No,” “Part” (Partially), or “Yes.” The “–” column indicates whether a question has been answered. To complete an indicator, all element sub-questions must be answered. As a researcher answers sub-questions, depending on the answers provided, conditional text boxes may ask for links or short text justifications.

Element sub-questions are clustered into groups; these are typically organized from larger questions that address the focus of the indicator, to narrower questions related to the indicator’s focus, to broader data questions and how they apply to this indicator. For example, in an availability question, you might find three groups of element sub-questions, named kinds of data, data fields and quality, and data openness, timing, and structure. Usually there will be two or three such named groups; the final group will be a repeating set of sub-questions that are almost always exactly the same.

Some indicators also include element sub-questions that investigate barriers or blocks—for example, looking at whether the availability of relevant data was affected by COVID-19. These elements are located at the very end of the elements section.

When to answer “Partially”¶

For some element sub-questions, we have provided specific guidance about which criteria justify a “Partially” answer; this will display beneath the sub-question in italics. In many others we have left what constitutes a “Partially” answer open in order to remain flexible to the wide variance across countries and governments.

Sometimes a single element sub-question will ask about the presence of multiple types of provisions, information, or activities. In this case, researchers should mark “Partially” when only some of the types are present and note which ones in the response box.

For example, one of the sub-questions for the indicator on the availability of Civil Registration and Vital Statistics (CRVS) data asks researchers whether mortality information includes data about age, sex and/or gender, geographic location, and cause of death. A country that only makes available age data should be marked “Partially;” a country that makes available data about age, sex and/or gender, and cause of death (but not data about geographic location) should also be marked “Partially.” Researchers should explain in the corresponding response box which mortality data they found to be available.
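The rule for sub-questions that cover several types can be sketched as follows. The function name `answer_multi_type` is hypothetical, and returning “No” when none of the types is present is an assumption; the guidance above only specifies when “Partially” applies.

```python
from typing import Iterable, List, Tuple


def answer_multi_type(required: Iterable[str],
                      found: Iterable[str]) -> Tuple[str, List[str]]:
    """Return the answer and the list of required types that were found."""
    required_set = set(required)
    if not required_set:
        raise ValueError("the sub-question must list at least one type")

    present = required_set & set(found)
    if present == required_set:
        answer = "Yes"          # every listed type is available
    elif present:
        answer = "Partially"    # only some of the listed types are available
    else:
        answer = "No"           # assumption: nothing found means "No"
    # The sorted list can be pasted into the response box to note what was found.
    return answer, sorted(present)
```

For the CRVS example, passing the four mortality fields as `required` and only `["age"]` as `found` returns “Partially” together with the single field that was located.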

Handling multiple or fragmentary evidence (elements)¶

Again, sometimes answers to an indicator’s sub-questions will be spread out across multiple sources. As noted above, to answer the existence sub-question of an indicator in such a case, you must identify all of the relevant components in the overall justification box. For corresponding element sub-questions, please also indicate in each individual response box which specific policy, dataset, etc. holds that answer.

For availability indicators, when you are working with answers spread out across multiple datasets, use the whole set to assess sub-questions about kinds of data and data fields and quality. However, in alignment with our bright spots design, when you assess data openness, timing, and structure, pick the most complete or comprehensive dataset and explain which one you have assessed in the justification box. Note that “completeness” or “comprehensiveness” here refers to the features assessed as part of the elements checklist. In some cases these individual datasets may not be the most complete in terms of geographic or jurisdictional coverage; this will be addressed in your answer to the indicator’s extent sub-question, which assesses the entire collection of datasets.
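A minimal sketch of this split, assuming each dataset is recorded with the fields it contains and the elements-checklist features it satisfies. The `Dataset` class, both function names, and the use of a simple feature count to decide which dataset is “most complete” are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class Dataset:
    name: str
    fields: Set[str]         # data fields the dataset contains
    checklist_met: Set[str]  # elements-checklist features it satisfies (hypothetical labels)


def fields_across_collection(datasets: List[Dataset]) -> Set[str]:
    """Union of fields: used for 'kinds of data' and 'data fields and quality'."""
    return set().union(*(d.fields for d in datasets))


def dataset_for_openness(datasets: List[Dataset]) -> Dataset:
    """Single dataset to assess for 'data openness, timing, and structure'.

    Assumption: 'most complete' is approximated by the number of checklist
    features satisfied; ties go to the first dataset listed.
    Raises ValueError if the list is empty.
    """
    return max(datasets, key=lambda d: len(d.checklist_met))
```

The dataset chosen this way should be named in the justification box, since reviewers need to know which one was assessed.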

For related information, see also the section on “Handling multiple or fragmentary evidence (existence).”

Data standards¶

Some governance and availability indicators include a sub-question that asks you to assess whether data is required to follow or follows standards. Such standards establish common formats for describing, recording, and publishing data, making it easier for more actors to use and reuse data.

For some kinds of data, there is widespread international agreement on which standards should be used. In these cases, the relevant standard is specified in the guidance for the specific indicator, to help researchers know which standard can be scored as “Yes.” Some kinds of data, however, do not yet have widespread agreement on standards. In these cases, when indicators ask about standards, researchers should assess if any standard at all is used.

Sex and/or gender¶

Some of our element sub-questions ask about “sex and/or gender.” As noted in the “Equity and Inclusion” section of the handbook (within “Cross-cutting Issues”), while sex and gender are increasingly recognized as spectrums not dependent on each other, some organizations and cultures use sex and gender interchangeably. Consequently, in many cases, sex data is listed under the heading of gender, obscuring or blurring gender data.

When a researcher finds that both sex and gender data are available, sources for each should be provided and researchers should note this in the justification box. Identifying examples of either one, however, constitutes a “Yes” answer to such element sub-questions.

Marginalized populations¶

While marginalization occurs around the world, the specifics of marginalization vary within each country. At the beginning of the survey researchers are asked to identify patterns of marginalization in their country. Regional coordinators should check these to make sure they are comprehensive. Sub-questions that ask about marginalized populations should be cross-checked against this list.

Artificial intelligence¶

To explore artificial intelligence as a cross-cutting issue, some of the Barometer’s governance and use indicators include sub-questions that ask about artificial intelligence and machine learning. The definition of artificial intelligence varies depending on the context of its use; we have designed our indicators to be flexible to this fact.

For governance indicators, we expect the frameworks to provide a definition, probably drawing from other relevant national or regional laws. To respond to these sub-questions, researchers don’t need to assess the definition given against an established one, but only to explore if the issue is addressed, and then briefly describe how.

The relevant use indicators explore if identified datasets have been used with artificial intelligence or machine learning. Here, the researcher should start by identifying the uses the indicator examines and then look for explanations of the processes involved in these uses for mention of these technologies.

Assessing barriers: COVID-19 and missing data¶

A small number of element sub-questions investigate barriers or blocks to data policies and practices, in relation to COVID-19 or missing data.

One COVID-19 sub-question appears only once, in a governance indicator. In addition, most of the availability indicators ask a sub-question about the effect of COVID-19 on data availability (this sub-question is always the same). Wherever possible, an individual indicator’s research guidance points to resources that have already assessed relevant COVID-19–related effects on data policies or practices.

Where no such resource is provided for an availability indicator, or where resources are not sufficiently comprehensive, the researcher should pursue two strategies. Before starting either, identify where the relevant data has been located in the past or should be located, and check for announcements of relevant COVID-related changes. If there are no such announcements, first conduct web searches for news articles reporting on COVID-related changes in collecting or publishing data in your country. Second, using the Wayback Machine of the Internet Archive, compare what the relevant site looked like in 2017, 2018, 2019, 2020, and 2021 to see if you can identify a pattern that has been disrupted in conjunction with the pandemic. Document your research steps in the research journal and explain your conclusions in the justification box.
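The year-by-year Wayback Machine comparison can be partly automated with the Internet Archive's availability endpoint, which returns the archived snapshot closest to a requested date. The sketch below is one possible approach, not a required method: the function names are hypothetical, and the mid-year date used for each lookup is an arbitrary assumption.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

AVAILABILITY_API = "https://archive.org/wayback/available"
YEARS = (2017, 2018, 2019, 2020, 2021)


def availability_query(page_url: str, year: int) -> str:
    """Build the lookup URL for the snapshot closest to 1 July of `year`."""
    # Assumption: a mid-year timestamp (YYYYMMDD) is a reasonable probe date.
    params = {"url": page_url, "timestamp": f"{year}0701"}
    return f"{AVAILABILITY_API}?{urlencode(params)}"


def closest_snapshot(page_url: str, year: int):
    """Return the closest snapshot record (a dict), or None if nothing is archived."""
    with urlopen(availability_query(page_url, year), timeout=30) as response:
        data = json.load(response)
    return data.get("archived_snapshots", {}).get("closest")


def snapshots_by_year(page_url: str) -> dict:
    """Map each study year to its closest snapshot record (network access required)."""
    return {year: closest_snapshot(page_url, year) for year in YEARS}
```

The closest snapshot may fall in a different year from the one requested, so check the `timestamp` value in each record before comparing pages, and note the snapshot URLs in the research journal.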

Each availability indicator also asks about whether or not there is evidence of missing data. In some cases, the Barometer’s availability indicators pair directly with a related governance indicator. In those cases, assess against the data requirements of the relevant governing framework. In other cases, the indicator itself identifies a dataset(s) to assess against.

In cases where there is no such identified dataset or related governance indicator, assess based on the parameters laid out in the publication of the information (e.g., are some fields entirely empty when they shouldn't be?), your local knowledge (e.g., if the data is supposed to include information for all public officials, does the number of total entries look right?), and any broader research you may have done for this theme (e.g., have media articles exposed the incompleteness of the data?).

Extent Sub-questions¶

An indicator's extent sub-question is designed to explore whether what is being evaluated demonstrates comprehensive coverage or whether it has limitations. For example, a capability indicator’s extent sub-question might ask whether a country’s data science training is available across the country or only in a limited number of locations; an availability indicator’s extent sub-question might ask whether a dataset that scores highly in the elements checklist is an exceptional outlier in a federal system or an example of the norm.

The research guidance for individual indicators includes details on any specific coverage concerns. See also the preceding section on “Locating the best example” for guidance on how to approach coverage when selecting examples to evaluate.

Meaningful positive impact¶

The Barometer’s use indicators include an extent sub-question that asks whether there is evidence that the identified uses have had or are having meaningful positive impacts. To answer this, researchers should first look for evidence that reports on or contextualizes an identified use’s impacts. Sometimes the evidence of the use will also include evidence of its impact; sometimes it will require additional research.

For example, if an investigative journalist has used lobbying data to expose government corruption, the researcher should look for evidence that this use has prompted action or response of some kind. This might include, for example, charges brought against officials, the launch of a committee to investigate or establish reforms, a poll that shows that this information has changed public opinion, etc.

Second, researchers should exercise their expert judgement as to whether the impacts associated with this use have been primarily cosmetic (lacking the substance to be either positive or negative), positive, or negative (causing identifiable harms). In the justification box, explain your reasoning and provide any available evidence.

Supporting Evidence¶

The supporting evidence section allows you to attach up to five structured links or files. These are published alongside the justification and indicator data, provide evidence for the given assessment, and can be checked by reviewers. This might include:

- Links to policies, strategies, or laws for a governance indicator;

- Links to datasets for an availability indicator;

- Links to reports or discussions on data capability;

- Copies of PDF reports where they cannot be linked to online;

- Links to academic papers.

Evidence should be in the public domain and should not include private documents. Each item of evidence can have a title and either attached file or URL.

Choose the five most important sources to attach as structured evidence. In the justification box, cite all of the relevant sources.

Justifications & Sources¶

For every indicator, researchers should provide a list of the supporting evidence used and write a short prose explanation of the example assessed, and how certain judgements have been made.

Justification¶

The justification should include brief notes to support the assessments made, citing numbered items of supporting evidence to back up each key point. This justification is first used by the reviewers to check your assessment, and is then published alongside the raw data from the survey to support re-users of the data to understand the basis for each assessment.

Justifications should be written in clear English prose that does not use first person, but rather is written in a neutral impersonal way. Use a spellchecker or grammar checker if required.

You will need to create your own justifications based on the findings of your research, using content quotations when appropriate to support your argument but not relying solely on them. All justifications need to be self-contained and self-explanatory, with no cross-references between them. While sources must be cited to support justifications, a reader should be able to fully understand the justification without looking at the supporting evidence.

The following example is a real justification provided by a researcher for the ODB:

"In 2017, Government of Canada's Social Sciences and Humanities Research Council (SSHRC) partnered with Compute Canada, Ontario Centres of Excellence and ThinkData Works launched the first “Human Dimensions Open Data Challenge”, organized as part of SSHRC’s Imagining Canada’s Future initiative (1). On 23 May 2017, two winning teams were awarded $3,000, and, as part of the challenge, will be provided access to Compute Canada cloud resources for the remainder of the calendar year. The top team was awarded an additional $5,000 and given four tickets and an invitation to present at the High Performance Computing Symposium (HPCS) on 5-9 June in Kingston, Ontario (2). In October 2017, Canada’s Open Data Exchange (ODX) has launched their second investment into the Ontario startup ecosystem through the ODX Ventures program. Through a planned $438,000 investment into 10 Ontario-based companies, the program aims to accelerate data-focused projects which have traditionally struggled to secure funding. In December 2016, the first ODX Ventures cohort included $400,000 worth of investment across eight startups, and to date, has led to 26 unique contractors developing work in the open data space (3). To support a culture of innovation, there is an online apps gallery hosts mobile apps, web apps and visualizations made with federal data (4). There is also a Community Page where dataset, discussions and apps are gathered based on interest (e.g. agriculture, education, nature and environment).

Sources¶

(1): http://www.sshrc-crsh.gc.ca/society-societe/community-communite/hdodc-ddodh-eng.aspx

(2): https://www.computecanada.ca/events/human-dimensions-open-data-challenge/

(3): https://codx.ca/odx-invests-in-ontario-open-data-ventures/

(4): http://open.canada.ca/en/apps "

You should always provide a justification, even when you answer “No” to an existence question.
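Because justifications cite numbered items of supporting evidence, as in the example above, a quick consistency check before submission can catch a citation with no matching source. The following is a minimal sketch with hypothetical function names; it assumes citations are written as a number in parentheses, such as `(1)`, so a parenthesized year like `(2017)` would also be picked up and should be ignored by hand.

```python
import re
from typing import Dict, List, Set


def cited_numbers(justification: str) -> Set[int]:
    """Collect every number that appears alone in parentheses, e.g. (3)."""
    return {int(n) for n in re.findall(r"\((\d+)\)", justification)}


def check_citations(justification: str,
                    sources: Dict[int, str]) -> Dict[str, List[int]]:
    """Compare numbers cited in the text with the numbered source list."""
    cited = cited_numbers(justification)
    listed = set(sources)
    return {
        "missing_sources": sorted(cited - listed),  # cited but not listed
        "uncited_sources": sorted(listed - cited),  # listed but never cited
    }
```

An empty result in both lists means every cited number has a source and every source is cited at least once.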

Citation guidance¶

Citing desk research and interviews¶

Selected sources should always reflect the project’s study period.

- Please use exactly the following format when citing interviews: Interview sources must include the full name of the interviewee, the name of the interviewee’s employer, and job title. Example: Interview with Jane Doe, Ministry of Justice, Director General.

- Anonymity: When it is not feasible to publish the name of an interviewee, out of justified fear for the interviewee’s safety or negative professional ramifications, please include the name of the interviewee in the research journal. In the justifications box, simply state “anonymous” for the interviewee name while providing as many other details as possible (e.g., Anonymous, Ministry of Finance, government official). The Barometer will maintain confidentiality; no names provided in the research journal for anonymous sources will be published.

Researchers should exercise professional judgment to determine whether opinions of an interviewee are factual and accurate. We strongly suggest researchers corroborate information obtained in interviews with desk research and do not rely on a single personal opinion.

- Please use the following format when citing media articles: name of author, name of publication, title of article, date published, and a URL/hyperlink or a digital/PDF attachment if a link is not available or has expired.

- Please use the following format when citing journal articles, written documents, or other third-party desk research: provide the full citation: name of author(s), year of publication, title, journal/publishing house; whenever possible please also provide a URL/hyperlink or attach an electronic copy if a link is unavailable. For example: Berg, Janina and Daniel Freund. 2015. EU legislative footprint: What’s the real influence of lobbying? _Transparency International EU_. https://transparency.eu/wp-content/uploads/2016/09/Transparency-05-small-text-web-1.pdf
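The interview format described above is simple enough to generate consistently with a small helper. This is an illustrative sketch: `cite_interview` is a hypothetical name, and the assumption that an anonymous citation keeps the “Interview with” prefix is an interpretation of the guidance rather than a stated rule.

```python
def cite_interview(name: str, employer: str, job_title: str,
                   anonymous: bool = False) -> str:
    """Format an interview citation for the justification box.

    When `anonymous` is True the real name is replaced with "Anonymous";
    the real name belongs only in the (unpublished) research journal.
    """
    shown_name = "Anonymous" if anonymous else name
    return f"Interview with {shown_name}, {employer}, {job_title}."


# Reproduces the named example given in the guidance above.
named = cite_interview("Jane Doe", "Ministry of Justice", "Director General")

# Anonymous variant: other details are kept as specific as safety allows.
hidden = cite_interview("(name withheld)", "Ministry of Finance",
                        "government official", anonymous=True)
```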

Citing laws or regulations¶

- Researchers must identify the law (full name, article, etc.), case law, specific legal articles, or statutes and provide a direct quote or a detailed explanation of the law in the comments box, as needed.

- Researchers must refer, where appropriate, to case law, specific legal articles, or statutes.

- Researchers are to reflect and describe applicable traditional and customary law when necessary.

- Laws cited must be domestic laws rather than international law.

- Please use this specific format when citing laws or regulations: name of law, article, section number, year, hyperlink to law. Example: Constitution Acts, Part I: Canadian Charter of Rights and Freedoms, Section 6. 1982. http://laws.justice.gc.ca/eng/Const/page-11.html#sc:7:s_6
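The law citation format can be sketched the same way. The function name `cite_law` and its parameter names are hypothetical; the punctuation follows the Canadian Charter example above.

```python
def cite_law(name: str, article: str, section: str, year: int, url: str) -> str:
    """Format a law or regulation citation: name, article, section. year. link."""
    return f"{name}, {article}, {section}. {year}. {url}"


# Reproduces the example citation given in the guidance above.
charter = cite_law(
    name="Constitution Acts",
    article="Part I: Canadian Charter of Rights and Freedoms",
    section="Section 6",
    year=1982,
    url="http://laws.justice.gc.ca/eng/Const/page-11.html#sc:7:s_6",
)
```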

The Research Journal¶

For each indicator, the survey tool provides a research journal. Researchers should use this to briefly record the steps they take to carry out their research. The research journal is not published, but is used to support the review process. The research journal holds particular relevance when reviewing indicators where no evidence of existence was found, since reviewers will need to inspect the research process followed to reach this conclusion.

For example...

In this example, the research journal entry describes the steps taken to respond to an indicator. "Followed source guidance to look at DLA Piper Data Protection Laws database & Greenleaf tables of privacy laws: used this to review the element sub-questions. Searched with Google for “UK Data Protection Migrants” based on past awareness of an “immigration exemption” and looked at the first three results. Sources used included in the supporting evidence section. Searched for “Data protection COVID-19” and reviewed content from the ICO: looked for the best source to use, and confirmed dates of documents. Checked results when talking with a civil society informant on June 22nd to discuss a number of different questions. Discussed whether migrants' judgement should be “No” or “Partially” (see justification for conclusion)."